Businesses are defined by how productive they are. The more tasks an organization can complete successfully in a working day, the faster it moves towards its goals. That’s why many website development and maintenance tasks are already automated.

Browser automation is one of the newest and most promising trends. Yet, many organizations are still struggling to automate tasks that are outside their internal systems. Here, we are going to cover what browser automation is, where you can apply it, and what challenges lie ahead.

The term ‘automation’ used to be associated only with automatic equipment used in manufacturing. Think about the first Fords or the advanced microprocessors made on production lines. Today, much of our work takes place in the digital space, so digital processes are being automated too.

Different types of automation are used – from simple Excel formulas to advanced scripts and, most recently, the rise of AI technology. Such automation helps organizations and individuals to be more effective when completing tasks in closed and private systems.

Suppose that a company needs to check hundreds of customer forms for basic information, such as emails, addresses, and names, before contacting the customers. A person might start by doing this manually and documenting every step they take to finish the task.

Once the processes are defined rigorously enough, an automated script can be written utilizing the company’s internal systems and completing the tasks without human intervention. Later, such a process might be optimized further by AI or other tools. The cycle continues when new automation tasks are found.
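Once the steps are written down, the form check described above could look something like the minimal sketch below. Everything in it is hypothetical and chosen for illustration: the `CustomerForm` fields, the deliberately simple email pattern (not full RFC 5322 validation), and the five-character minimum for addresses. A real script would read forms from the company's internal systems instead of a hardcoded list.

```python
import re
from dataclasses import dataclass

# Deliberately simple pattern: something@something.tld (not full RFC 5322).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class CustomerForm:
    # Hypothetical fields for illustration.
    name: str
    email: str
    address: str


def validate(form: CustomerForm) -> list:
    """Return a list of problems; an empty list means the form passes."""
    problems = []
    if not form.name.strip():
        problems.append("missing name")
    if not EMAIL_RE.match(form.email.strip()):
        problems.append("invalid email")
    if len(form.address.strip()) < 5:  # assumed minimum length
        problems.append("address too short")
    return problems


def triage(forms):
    """Split forms into a ready-to-contact list and a needs-review list."""
    ready, review = [], []
    for form in forms:
        issues = validate(form)
        if issues:
            review.append((form, issues))
        else:
            ready.append(form)
    return ready, review
```

Returning a list of problems rather than a single yes/no answer means a human reviewer sees exactly why a form was held back, which makes it easier to refine the rules later.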

Such internal workflow automation involves tasks related to systems and communication within the company. Automation gets more complicated when we aim to automate processes outside the internal systems.

Browser automation

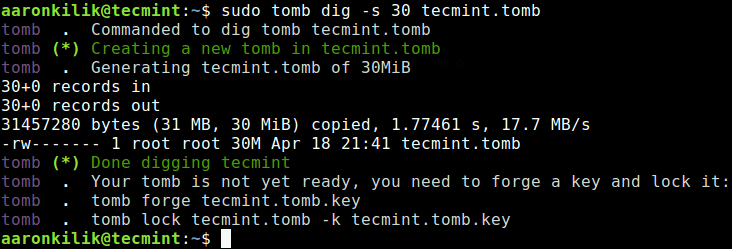

Browser automation (also known as online or web automation) means controlling a web browser programmatically. The browser often runs without a graphical interface, and a single command can trigger a whole series of actions. This helps automate routine, monotonous tasks such as collecting data, testing websites, and logging into accounts.

Automated tasks may relate to your own website, but browser automation also lets you access other websites and interact with them automatically: clicking buttons, typing text, logging into accounts, and much more. Almost anything a human can do in a web browser can be automated.
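To see how one command turns into a series of actions, it helps to know that tools such as Selenium drive browsers through the W3C WebDriver protocol, where each action is an HTTP request to the browser driver. The sketch below expands a single hypothetical "log in" command into the WebDriver requests it would need (Navigate To, Find Element, Element Send Keys, Element Click). It assumes a session was already created with a New Session request; the session ID, URL, and CSS selectors are made up. Because the driver only returns element IDs after answering a Find Element request, the sketch uses placeholder tokens such as `<id:#email>` where a real client would substitute them.

```python
import json


def log_in_plan(session_id: str, url: str, email: str, password: str) -> list:
    """Expand one high-level 'log in' command into W3C WebDriver requests.

    Each step is (HTTP method, path, JSON body). The selectors are hypothetical,
    and '<id:...>' marks where a real client inserts the element ID returned
    by the preceding Find Element request.
    """
    base = f"/session/{session_id}"
    steps = [("POST", f"{base}/url", {"url": url})]  # Navigate To
    for selector, text in (("#email", email), ("#password", password)):
        # Find Element: locate the input field by CSS selector.
        steps.append(("POST", f"{base}/element", {"using": "css selector", "value": selector}))
        # Element Send Keys: type text into the element found above.
        steps.append(("POST", f"{base}/element/<id:{selector}>/value", {"text": text}))
    submit = "button[type=submit]"
    steps.append(("POST", f"{base}/element", {"using": "css selector", "value": submit}))
    # Element Click: press the submit button.
    steps.append(("POST", f"{base}/element/<id:{submit}>/click", {}))
    return steps


if __name__ == "__main__":
    for method, path, body in log_in_plan(
        "abc123", "https://example.com/login", "me@example.com", "s3cret"
    ):
        print(method, path, json.dumps(body))
```

In practice you would not build these requests by hand; a client library such as Selenium sends them for you. The point of the sketch is that a one-line `log_in(...)` call in your own code can easily become seven browser actions.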

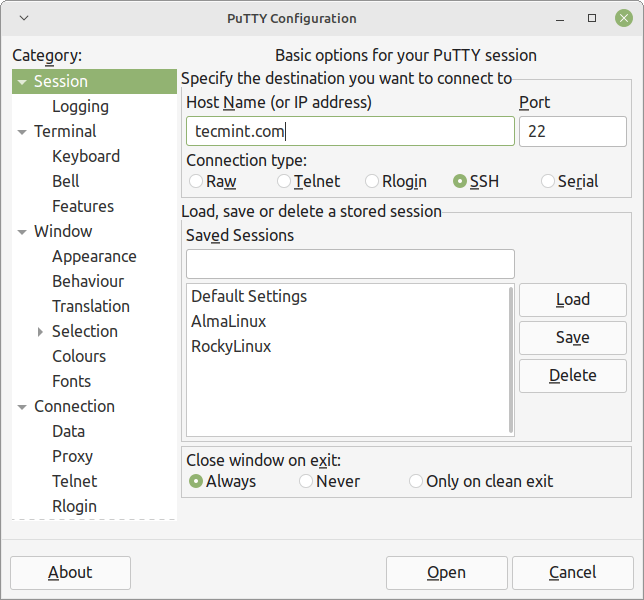

Since automation occurs in a web browser, an essential tool is a headless browser: a browser without a graphical interface that you control through code or a command-line interface. Headless browsers are often Chromium-based and are usually driven by an automation library. Here are some of the most popular headless browsers and automation tools:

- Selenium

- Playwright

- Puppeteer

- Headless Chrome

- Splash
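As an example of the simplest option on this list, Headless Chrome can be run straight from the command line. The sketch below assumes a recent Chrome that accepts `--headless=new`, and assumes the binary is called `google-chrome` (it may be `chromium`, `chrome`, or a full path on your system). `--dump-dom` prints the page's DOM to stdout once it has loaded, and `--screenshot` saves a `screenshot.png` in the working directory.

```python
import shlex
import subprocess


def headless_chrome_cmd(url: str, binary: str = "google-chrome", screenshot: bool = False) -> list:
    """Build the argument list for a one-off Headless Chrome run."""
    cmd = [binary, "--headless=new"]
    # --screenshot writes screenshot.png to the working directory;
    # --dump-dom prints the loaded page's DOM to stdout.
    cmd.append("--screenshot" if screenshot else "--dump-dom")
    cmd.append(url)
    return cmd


def fetch_rendered_html(url: str, binary: str = "google-chrome") -> str:
    """Run Chrome headlessly and return what it printed (requires Chrome installed)."""
    result = subprocess.run(
        headless_chrome_cmd(url, binary),
        capture_output=True,
        text=True,
        timeout=60,
        check=True,  # raise if Chrome exits with an error
    )
    return result.stdout


if __name__ == "__main__":
    # Print the command instead of running it, so this works without Chrome.
    print(shlex.join(headless_chrome_cmd("https://example.com")))
```

One-off commands like this are handy for quick checks; anything interactive (clicking, typing, waiting for elements) is where libraries like Selenium, Playwright, or Puppeteer come in.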

Some browser automation tools use Robotic Process Automation (RPA) technology, which lets users simply record their actions in the graphical interface. In such cases, little programming knowledge is needed to automate tasks. However, this is more of an exception than a rule.

The most popular browser automation tool, Selenium, requires the user to write code in languages such as Python, Java, or JavaScript. Writing commands instead of recording them gives much more flexibility and enables tasks that go beyond simply clicking buttons.

Web application testing

It can take twice as much time to test a website or a program as to code it in the first place. Online automation helps shorten testing. An automated script can run through the website or application, pressing buttons, playing videos, and completing other tasks much more efficiently than a human could.

Web applications ought to be tested from the user’s side, so it’s necessary to run such tests once the application is live; testing a website only in an internal sandbox is not enough. In some cases, the main aim is to check whether the website can handle a large load of connection requests.

Beyond load, browser automation plays an even broader role in web testing. A headless browser can imitate human interactions with the web application, which is essential for validating its UI/UX components, authentication processes, and other elements.
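The load-testing idea mentioned above can be sketched with Python's standard library: send many requests concurrently and look at the latency distribution. This is only an illustration of the principle, not a replacement for dedicated load-testing tools. `fake_request` is a hypothetical stand-in that just sleeps for about 10 ms; in a real test you would swap in an HTTP call (or a headless-browser page load) against a site you are authorized to stress.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed(call) -> float:
    """Run one request-like callable and return how long it took, in seconds."""
    start = time.perf_counter()
    call()
    return time.perf_counter() - start


def load_test(call, total_requests: int = 100, concurrency: int = 10) -> dict:
    """Issue total_requests calls using `concurrency` worker threads; summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(lambda _: timed(call), range(total_requests)))
    return {
        "requests": len(latencies),
        "median_s": statistics.median(latencies),
        # Simple index-based approximation of the 95th percentile.
        "p95_s": latencies[int(0.95 * (len(latencies) - 1))],
        "max_s": latencies[-1],
    }


def fake_request() -> None:
    """Hypothetical stand-in for a real HTTP call: pretend it takes ~10 ms."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(load_test(fake_request, total_requests=50, concurrency=10))
```

Reporting the 95th percentile and maximum alongside the median matters because a site can look fast on average while a noticeable share of users still waits much longer under load.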

Web scraping

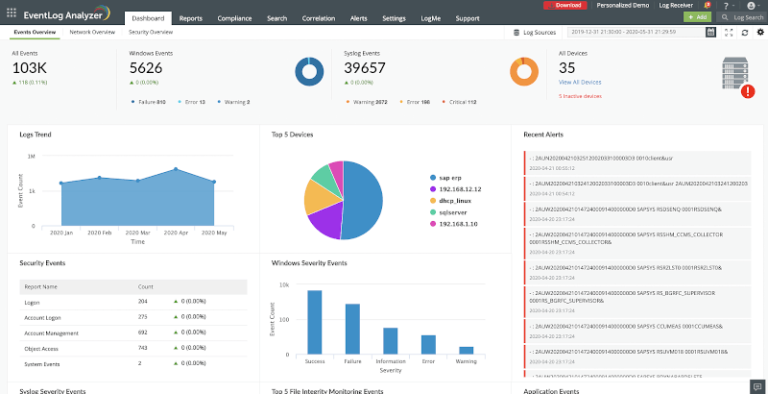

A headless browser driven by a tool such as Selenium can be programmed to automate data collection. In such scenarios, it functions as a web scraper that fetches and extracts data from websites. Such data is often used to monitor competitors, generate leads, protect brands, and track prices.
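For price tracking, the extraction step might look like the sketch below. It assumes the HTML has already been fetched (for instance, the page source a headless browser returns after rendering) and that prices sit in elements with a `price` class, written like `$1,299.50`. Both the class name and the format are assumptions about a hypothetical page; real sites differ, and this simple parser also assumes the price text is not split across nested tags.

```python
from decimal import Decimal
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of elements whose class list includes 'price'.

    Assumes the price text sits directly inside that element (no nested tags).
    """

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True
            self.prices.append("")

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices[-1] += data.strip()


def extract_prices(html: str) -> list:
    """Return prices written like '$1,299.50' as Decimal values."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return [Decimal(p.replace("$", "").replace(",", "")) for p in parser.prices if p]
```

`Decimal` is used instead of `float` so that prices compare exactly when you track them over time.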

While dedicated web scraping software exists, browser automation is a better option when you need a custom approach. Many websites have dynamic elements, such as endless scroll pages, pop-ups, and interactive forms, whose content only appears after JavaScript runs or the user interacts with the page. A headless browser handles these elements like a regular browser, so you can extract data from other websites and integrate it into your own through an API.
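Endless scroll pages are a good example of why a browser is needed. A common approach is to keep scrolling to the bottom until the page height stops growing. The sketch below keeps that loop independent of any particular library by taking two callables. With Selenium, they could be `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")` and `lambda: driver.execute_script("return document.body.scrollHeight")`, assuming an existing `driver` object; the pause length and round limit are arbitrary defaults.

```python
import time
from typing import Callable


def scroll_until_exhausted(
    scroll_to_bottom: Callable[[], None],
    page_height: Callable[[], int],
    pause_s: float = 1.0,
    max_rounds: int = 50,
) -> int:
    """Keep scrolling an endless-scroll page until its height stops growing.

    Returns the number of scroll rounds performed.
    """
    last_height = page_height()
    for rounds in range(1, max_rounds + 1):
        scroll_to_bottom()
        time.sleep(pause_s)  # give the page time to load the next batch
        new_height = page_height()
        if new_height == last_height:
            return rounds  # nothing new loaded, so we reached the end
        last_height = new_height
    return max_rounds  # safety cap for feeds that never end
```

The `max_rounds` cap matters on feeds that genuinely never end; without it, the loop would keep running (and keep sending requests) indefinitely.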

E-commerce tasks

Automated processes can be used to perform tasks when building an e-commerce website. Managing your seller account on sites like Amazon or eBay can be easily automated with a headless browser. What’s even better is that you can also automate the way you purchase goods before reselling them.

Instead of spending time waiting in a digital queue, you can log in and complete the whole checkout process automatically. A headless browser can get through checkout in seconds, so humans have little chance of competing with you. Such automation is the backbone of many online wholesale and dropshipping businesses.

Social media management

Marketers automate social media management because they face a unique set of problems when trying to access multiple accounts at once. Scheduling posts and other actions across different time zones, for example, is difficult with the standard user interface most platforms offer.

Many platforms also limit the number of accounts that can be accessed from one device. This creates unnecessary friction for agencies managing clients’ accounts or companies with a large online presence. Another significant struggle for such professionals is the lack of engagement metrics needed to understand the performance of their campaigns.

Browser automation can help solve all of these problems. An automated script can collect the needed metrics, schedule posts, and let you use multiple social media accounts at once. It makes a marketer’s job much easier and more efficient.

With the click of a button, one marketer can access dozens of accounts and trigger the needed actions. A headless browser can automate likes, posts, private messages, descriptions, and everything else.
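The scheduling part of the time zone problem is mostly a matter of converting "9:00 local time" for each audience into a single UTC timeline that a script can work through. The sketch below only computes those times; actually publishing the post is out of scope. The audience names and their fixed UTC offsets are hypothetical and only correct in winter. A real scheduler should use `zoneinfo.ZoneInfo("America/New_York")` and similar so daylight saving time is handled.

```python
from datetime import date, datetime, timedelta, timezone

# Hypothetical audiences with fixed UTC offsets (valid in January). Real
# schedulers should use zoneinfo.ZoneInfo(...) so daylight saving is handled.
AUDIENCES = {
    "new_york": timezone(timedelta(hours=-5)),
    "london": timezone.utc,
    "tokyo": timezone(timedelta(hours=9)),
}


def publish_times_utc(day: date, local_hour: int, audiences=AUDIENCES) -> list:
    """Return (audience, UTC time) pairs so each audience sees the post at local_hour."""
    plan = []
    for name, tz in audiences.items():
        local = datetime(day.year, day.month, day.day, local_hour, tzinfo=tz)
        plan.append((name, local.astimezone(timezone.utc)))
    # Sort chronologically so a scheduler can simply post in order.
    return sorted(plan, key=lambda item: item[1])
```

For 9:00 local time on 15 January 2024, this puts Tokyo first (00:00 UTC), then London (09:00 UTC), then New York (14:00 UTC).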

Many websites view browser automation as harmful and try to prevent it. There are several reasons for this:

- Performance issues. Too many requests from bots can overload servers, and improving the performance is expensive.

- Data control. Websites want to protect their data and sources of monetization.

- Security. Bad actors might use similar means to attack the website’s infrastructure with DDoS or similar attacks.

- Enforcing terms and conditions. Many websites restrict scripts and bots because it’s against what they consider a fair use of their services.

However, most of the online automation examples we mentioned earlier can be applied legitimately without causing harm to the target websites. For example, web scraping can be performed on publicly available data. Nevertheless, websites may still impose restrictions on browser automation, and you’ll need to overcome such challenges.

Most restrictions websites impose are tied to IP addresses. An IP address is a numerical label that identifies a device on a network; it reveals the visitor’s approximate location and helps websites track them. One common measure is to block certain IP addresses or impose geo-restrictions.

This is a challenge for browser automation efforts, since part or all of a website might become inaccessible to a headless browser. However, banning ranges of IPs might also affect other users, which is why measures such as CAPTCHA tests are more common.
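The collateral damage of range bans is easy to see with Python's `ipaddress` module. The blocklist below is hypothetical and uses the documentation-only ranges from RFC 5737. A single `/24` rule already covers 256 addresses, so blocking one misbehaving visitor this way can lock out everyone else who shares that range.

```python
import ipaddress

# Hypothetical blocklist using documentation-only ranges (RFC 5737).
BLOCKED = [
    ipaddress.ip_network("203.0.113.0/24"),     # 256 addresses
    ipaddress.ip_network("198.51.100.128/25"),  # 128 addresses: .128 to .255
]


def is_blocked(ip: str) -> bool:
    """Return True if the address falls inside any blocked range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in BLOCKED)


if __name__ == "__main__":
    for net in BLOCKED:
        print(net, "covers", net.num_addresses, "addresses")
```

For example, `is_blocked("198.51.100.200")` is true while `is_blocked("198.51.100.5")` is false, even though both addresses belong to the same `/24`.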

CAPTCHAs are designed to determine whether a visitor is a human or an automated script (bot). Using only one IP address to perform browser automation might lead to it getting flagged, which results in constant CAPTCHA tests disrupting your workflow.
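Why a single IP gets flagged becomes clearer from the website's side. A very simple heuristic is to count requests per IP in a sliding time window and show a CAPTCHA once the count passes a threshold. The sketch below is a hypothetical illustration with made-up limits; real anti-bot systems combine many more signals than request rate.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class RequestMonitor:
    """Flag an IP that sends more than `limit` requests within `window_s` seconds."""

    def __init__(self, limit: int = 60, window_s: float = 60.0):
        self.limit = limit
        self.window_s = window_s
        self._hits = defaultdict(deque)  # ip -> timestamps of its recent requests

    def record(self, ip: str, now: Optional[float] = None) -> bool:
        """Record one request and return True if this IP should now get a CAPTCHA."""
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        hits.append(now)
        # Drop timestamps that have slid out of the window.
        while hits and hits[0] <= now - self.window_s:
            hits.popleft()
        return len(hits) > self.limit
```

With `limit=3` and a 10-second window, a fourth request within the same 10 seconds from one IP is flagged, while the same number of requests spread across different IPs is not. This is exactly the pattern that makes single-IP automation stand out.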

Changing your IP address with a residential proxy server is the best way to avoid such restrictions. Providers like IPRoyal offer proxies hosted on ordinary household devices, with IP addresses assigned by an Internet Service Provider (ISP). Such IPs look no different from those of ordinary users, which makes your browser automation efforts far less likely to be detected.

Other types of proxies, such as datacenter ones, might also work to shield your IP. But the legitimacy of residential IPs makes them the best way to overcome browser automation’s challenges. Tasks like social media management are hardly possible without them.
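A basic rotation scheme cycles through a pool of proxies and sends each new batch of work through the next one. The sketch below uses the standard library's `urllib.request.ProxyHandler`; the proxy hosts and credentials are hypothetical placeholders for whatever your provider issues. With a headless browser you would instead pass the proxy through the browser's own settings (Chrome, for example, accepts a `--proxy-server` flag). For account-based tasks like social media management, you may prefer to pin one proxy per account instead of rotating on every request, so each account consistently appears from the same address.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; a provider would supply real hosts and credentials.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

_rotation = itertools.cycle(PROXIES)


def next_opener():
    """Return (proxy_url, opener) that routes HTTP and HTTPS through the next proxy."""
    proxy = next(_rotation)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


# Usage (makes a real network request, so it is left commented out):
# proxy, opener = next_opener()
# html = opener.open("https://example.com", timeout=30).read()
```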

To stay undetected, you should still limit the number of requests you send and generally abide by each website’s rules. While browser automation might remain challenging, the efficiency gains make it well worth the effort.
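Two practical ways to respect a website's rules are to honour its `robots.txt` file and to pace your requests. The sketch below uses the standard library's `urllib.robotparser` to check whether a URL may be fetched and to read the non-standard but widely used `Crawl-delay` directive, then enforces a minimum gap between requests. The `robots.txt` contents, the `my-bot` user agent, and the URLs are hypothetical. Keep in mind that `robots.txt` is a voluntary convention (standardized in RFC 9309), and a site's terms of service may impose further restrictions.

```python
import time
import urllib.robotparser

# Hypothetical robots.txt; a real script would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def make_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


class PoliteFetcher:
    """Skip disallowed URLs and wait at least `delay_s` seconds between requests."""

    def __init__(self, rules, user_agent: str = "my-bot", default_delay_s: float = 1.0):
        self.rules = rules
        self.user_agent = user_agent
        # Prefer the site's Crawl-delay; fall back to our own default.
        self.delay_s = rules.crawl_delay(user_agent) or default_delay_s
        self._last = None

    def allowed(self, url: str) -> bool:
        return self.rules.can_fetch(self.user_agent, url)

    def wait_turn(self) -> None:
        """Sleep just long enough to respect the delay since the previous request."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.delay_s - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()
```

A scraping loop would call `allowed(url)` before each page and `wait_turn()` right before sending the request, so the pacing holds even when pages take different amounts of time to process.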

We have come a long way since only the tasks on internal systems were completed programmatically. Whether browser automation will reach the same level of adoption

Author

Equally known for her brutal honesty and meticulous planning, Simona Lamsodyte has established herself as a true professional with a keen eye for detail. Her experience in project management, social media, and SEO content marketing has helped her constantly deliver outstanding results across various projects. Simona is passionate about the intricacies of technology and cybersecurity, keeping a close eye on proxy advancements and collaborating with other businesses in the industry.